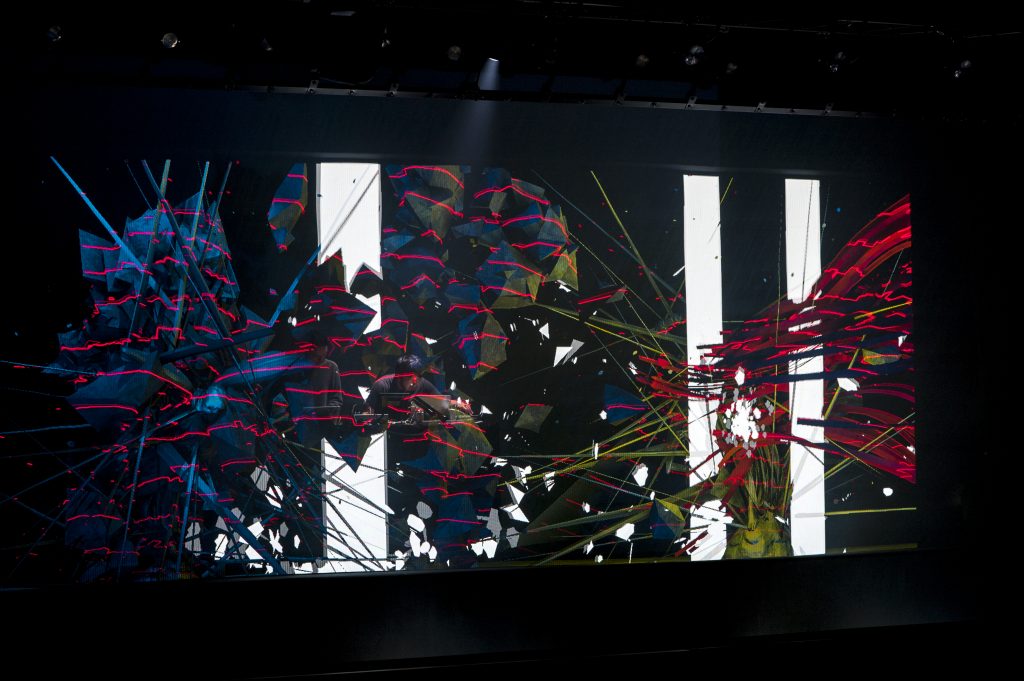

The work of Keijiro Takahashi exists at the orthogonal intersection of videogames, music, filmmaking, and VFX. Takahashi’s regular stream of experiments explores various combinations of tools: the Unity game engine, Depthkit’s volumetric filmmaking software, and recently Roland’s MC-101 Groovebox, to name a few. His results blur the lines between these more siloed creative pursuits, often with connective tissue provided by open protocols like MIDI and OSC.

Takahashi is also a low-level programmer and toolmaker himself. To that end, he makes all his source code available on GitHub for others to experiment with. These include tools for MIDI-based animation sequencing, various holographic, VJ, and motion capture interface tools, and even Grubo, an audiovisual experience created with the MC-101 and Unity. It’s an understatement to call Keijiro Takahashi prolific, both in his creative work and his dedication to sharing it.

You’ve mentioned that you got your start working at a Japanese video game studio. What kinds of games did you work on there, and what prompted you to move away from that industry?

I was directly engaged in game development mostly during the PlayStation 2 era. The most well-known of those games are some titles in the Ape Escape series. I belonged to a company dedicated to developing console games, but my interest was more in a “lighter” development style, such as apps for smartphones. So, I left the company and started working as a freelance engineer when the market for the iPhone in Japan started burgeoning.

At this point, my main work was developing apps and games for smartphones. Then, one day Masaya Matsuura (PaRappa the Rapper, Vib-Ribbon)—a pioneer in the world of music and game production in Japan—invited me to take part in a concert with my visuals. This was an impetus for me to start pursuing visual creation with Unity. My encounter with Matsuura, who remains active in both the game and music industries, meant a lot for what I’m doing today.

What’s something you learned from your work making games that you still apply to your creative practice?

The game industry is an incubator for cutting-edge technology and development in realtime computer graphics. Even today, I base my study and learning on knowledge from the game industry. I expect the next generation of game hardware will bring even more breakthroughs, and that they will spread to fields outside of games.

Do you find yourself trying to find a balance between screens/keyboards, and manipulating hardware? Do you have a strong preference between the physicality of using knobs and sliders, vs. writing code, and using a software GUI? It seems like you spend a lot of time doing all these things.

Fundamentally, I like operating hardware with knobs and sliders. By concentrating all my senses, it gives me a sense of connectivity and “integrated feel,” as if the hardware becomes a part of myself, and it’s a very important factor in artistic expression.

But at the same time, hardware interface design has a problem: the more parameters you have to control, the more complicated operation becomes, and the harder it is to learn.

To solve this issue, I employed a GUI using multi-touch screens. You can arrange the location of the parameters, and organize them by labeling and coloring for more intuitive operation.

Mostly for operational convenience, I use a GUI a lot, but it doesn’t give me a sense of connectivity and integration. I prefer to use hardware, as much as the situation allows.

What about the art of audio-visual synchronization has surprised you? For instance, it often feels generally like audio and visuals are fighting a battle between “heavy synchronization” and “organic messiness”—both of these can of course be great, but often they work against one another.

In my personal opinion, audio and visuals should be perfectly synchronized. But often, it’s difficult because of technical limitations, and I have to settle for “organic messiness” as a compromise.

Often, I collaborate with DUB-Russell. An advantage of working with them is that we can synchronize via the OSC (Open Sound Control) protocol. I can drive the visual effects to be 100% in sync with the beat they create. This synergy creates a uniquely exciting experience.

I can also achieve perfect audio-visual sync in my performances with the MC-101. In my recent work Khoreo, the musical performance and visuals synchronize automatically through analysis of the MIDI and audio signals from the four individual tracks of the MC-101. I composed the music with this system in mind from the beginning, so I could make the fusion between audio and visual feel natural.
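
For readers curious how MIDI-driven sync of this kind can work in principle, here is a minimal sketch in Python. It is not taken from Khoreo or any of Takahashi’s code. It assumes, hypothetically, that each of the four tracks arrives on its own MIDI channel, and the names `VisualTrigger` and `parse_note_on` are purely illustrative.

```python
from dataclasses import dataclass
from typing import Optional

NOTE_ON = 0x90  # upper status nibble of a MIDI note-on message


@dataclass
class VisualTrigger:
    track: int        # 0-based track index (assumed equal to the MIDI channel)
    note: int         # MIDI note number, 0-127
    intensity: float  # velocity scaled to the range 0.0-1.0


def parse_note_on(message: bytes, num_tracks: int = 4) -> Optional[VisualTrigger]:
    """Return a trigger for a 3-byte note-on message, or None for anything else."""
    if len(message) != 3:
        return None
    status, note, velocity = message
    channel = status & 0x0F  # lower nibble carries the channel (0-15)
    if status & 0xF0 != NOTE_ON or velocity == 0:
        # By MIDI convention, a note-on with velocity 0 acts as a note-off.
        return None
    if channel >= num_tracks:
        # Hypothetical mapping: only the first four channels drive visuals.
        return None
    return VisualTrigger(track=channel, note=note, intensity=velocity / 127)


if __name__ == "__main__":
    # Note-on on the second channel (index 1), note 60, full velocity.
    print(parse_note_on(bytes([0x91, 60, 127])))
```

In a setup like this, each trigger could drive one visual element per track, so every note the performer plays produces a visual event at the same moment.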

What do you like about the MC-101, in terms of how it fits into both your music and visual workflows?

Above all, the beauty of the MC-101 for me is its smooth connectivity with the computer. A single USB cable transfers four tracks of MIDI and audio signals without any hassle. It also runs on USB bus power, without an extra power cable. The ease of setup is second to none.

I was also amazed by its innovative design concept as a Groovebox. In recent years, the trend in Grooveboxes was the combination of retro (virtual) analog synths or samplers and step sequencers. But the MC-101/707 paved the way by employing the latest sound engine to give a huge variety of preset sounds. I had been kind of tired of those recent Grooveboxes, and this was an eye-opener for me. As soon as I started working with it, I realized its creative potential. It stimulated my creativity.

I also appreciate its high-quality effects, the versatility of its Scatter effects, and the intuitive sequencer, among other things. Four tracks might seem like a creative limitation, but it inspires my composition, and also makes synchronization with visuals smooth and accessible.

Perhaps coincidentally, the MC-101 matches my style more than any other equipment.

You’ve been using Unity for quite a while, and it seems like a centerpiece of your workflow. Has it been a mission of yours to bring more experimental, non-game-focused functionality to that platform?

I don’t have any mission with Unity. Honestly, it’s the best of the tools that I can handle, so I’m staying with it. I stick with the realtime graphics of Unity, as opposed to pre-rendered CG, because I can’t stand the pain of wasting time waiting in the pre-rendering process.

Also, in the process of game development, waiting during building and compiling is inevitable. This process is horrible for me. Wasting precious time and breaking concentration is so inefficient and unproductive for creative workflow. I carefully select the methods I use to minimize the loss of time in my working process with Unity.

So, if you could say I had a mission, it might be to eliminate the waiting time in creative work.

Much of your work converges in the field of mixed reality, which directly incorporates volumetric filmmaking and many of the VFX techniques you’ve been pioneering over the years. What do you think the future of AR/VR/XR will look like?

Frankly speaking, I’m not very into VR. I learned my methods of visual expression from film and anime. In films and anime, camera and editing are essential. It might not be very obvious, but the motion and camera angles are very important in the visual effects I create. In the realm of VR, the one who experiences these factors controls them. They are out of the control of the creator. For me, this means giving up control of expression.

But I am interested in AR/MR. Even if I have to sacrifice some control, I still see this new frontier as a challenge that I’m approaching with passion.

As a digital artist, how important do you think it is to dive into the lower levels of programming, scripting, and shaders? It seems to come naturally to you. Will the tools become sophisticated enough that artists can focus on the “right-brain” creative aspects?

In my view, lower-level programming isn’t necessary for established workflows but is important in more experimental pursuits. These days, I work on visual effects mainly with the VFX Graph tool in Unity. This is more sophisticated in its design than any other effect systems I’ve ever used, and you don’t need to write programs or shaders to create effects anymore.

Even with this tool, though, customizing VFX Graph sometimes takes knowledge of low-level programming. For instance, I had to develop the path for transferring volumetric video into VFX Graph myself, because it was not originally integrated. Similar cases happen with other tools. Most designers create artist tools based on existing workflows. As a result, new experiments need customization.

With luck, sophisticated tools should be easy to customize. With Unity, basic knowledge of C# should be enough, and many other pieces of software can be easily customized with Python. I wouldn’t say skills in those programming languages are indispensable for artists, but they would be a helpful weapon.

You’re always working with a wide variety of cutting-edge technologies and tools. Are there any new areas that you’re particularly interested in digging into?

My recent interest is in realtime ray tracing. Many people see it as a new “dream-come-true” technology, but I don’t think applying it is easy. Still, I’m confident that it will help realize elaborate and expressive lighting techniques not seen before. I can imagine how users might apply it to projection mapping or MR-like integration with lighting in the real world.

Another field I’m interested in is machine learning. I had an opportunity to study it about two years ago and made projects such as Pix2Pix for Unity and Ngx, but I have to admit that the hardware at the time didn’t have the realtime processing that I needed, so I stopped. Now is the time to start back up.

What would be a dream project for you?

I’ve had a dream to collaborate with musicians with great playing skills — especially pianists, drummers, and turntablists. I have an idea to develop a system to generate visuals in realtime, by using musical instruments as controllers. Not like visuals to accompany the music, but for musicians to “play” visuals. Musicians are physically integrated with their musical instruments. I believe that visual expressions should reflect this expressiveness.

To realize this dream project, first of all, I need to understand musical instruments more. I’m thinking of taking piano lessons.